*Warning: May need to rename some columns if you reference them in the filter(s). I've found column names with underscores _ cause errors.

Dataset For Examples

For our examples we will use a dataset of 2000 customer food orders with associated invoice file names.

We will also use 100+ customer contact records and an array of about 4000 invoice numbers.

Python Filter Example

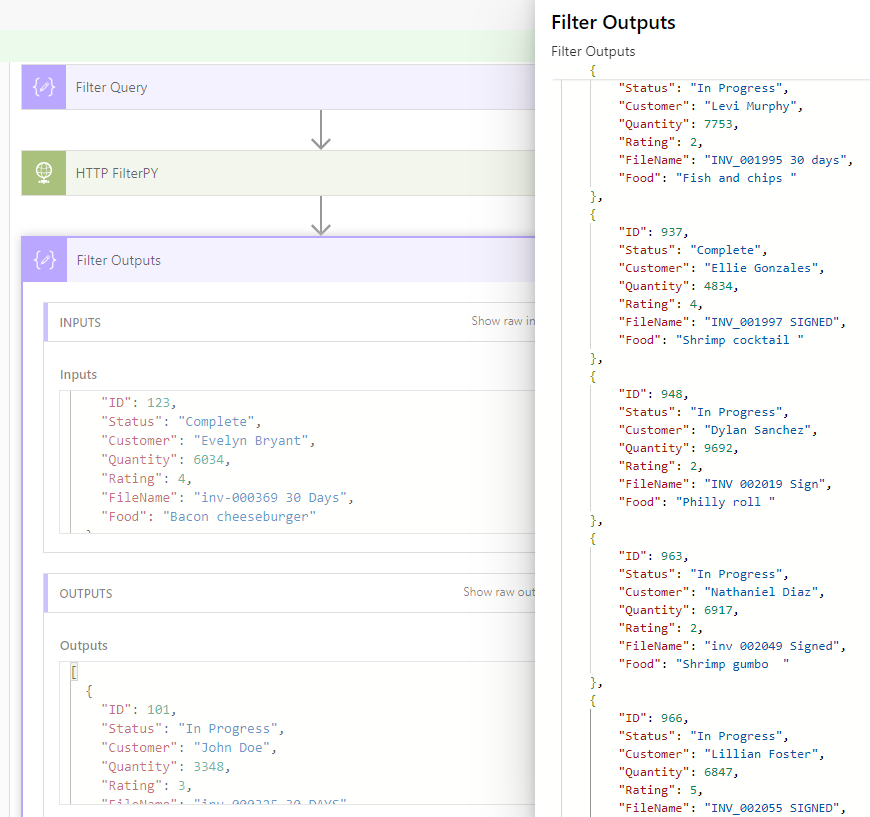

Here we see our FilterPY action takes a filter_query conditional query string & a filter_from JSON array / table of data (In this case our SampleData) to filter with the query string.

We can enter a Python query like...

where we can reference columns in the filter_from JSON array with item['InsertColumnName'] and we can use operators like ==, !=, >, >=, <, <=, or in and we can use Python expressions & functions to modify the data like .upper() and use more advanced functions like regex(r'InsertPattern', InsertColumnName) that returns true if regex finds at least one match in a column or returns false if it doesn't. (Navigate through several lists & descriptions of built-in Python functions at

W3 Schools.)

So if we run this Python Filter with our SampleData & the above query, we get...

And note, you can add / customize any more advanced filter query expressions by writing them into the Python code alongside the regex( ) function. Then you can call them in your query string.

Now, unless you need something more advanced like regex, then you may think a Python Filter doesn't add much value over the standard Filter array action. But with a good Filter function in a Python script, we can now go one step further & nest the Python Filter in a loop in the script itself. This will give us the functionality of a Filter array action inside an Apply to each loop, but the looping will happen in the much faster/more efficient Python script instead of in the slow Power Automate or Logic Apps Apply to each / For Each loop.

[And if you are more Power Apps minded, the following For Each examples are like ForAll(Lookup( )) and ForAll(Filter( ))]

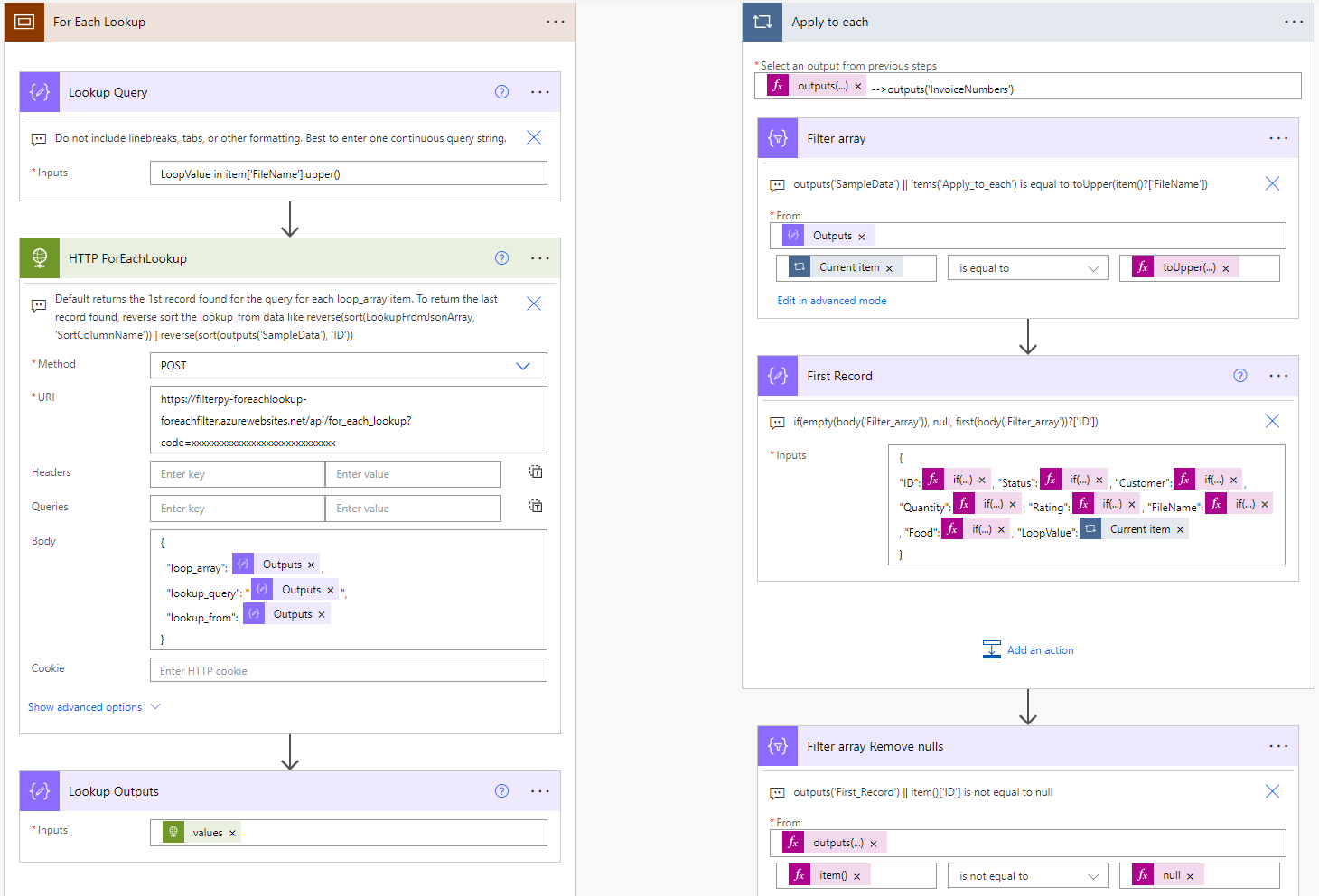

For Each Lookup Example

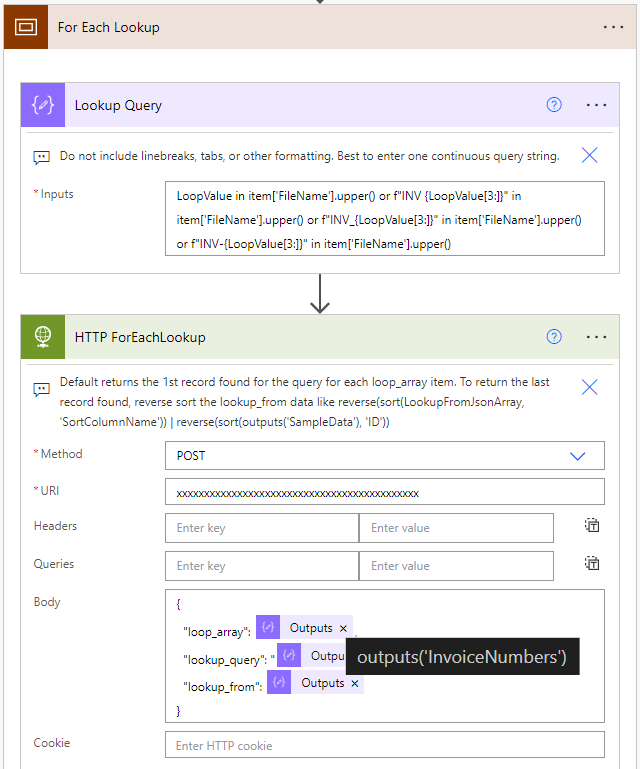

Here we see the ForEachLookup action takes a loop_array input for what array/JSON array to iterate over [like the main input to an Apply to each loop] (in this case we are using the InvoiceNumber array), a lookup_query for our Python query, & a lookup_from for the JSON array / table of data to lookup in. The action will output a JSON array of all the 1st records it found for the lookup_query for each loop_array value. It will also return a no_value_loop_array of each loop_array item where the query did not return any records.

Note we now have a lookup_query of...

where we now add a LoopValue parameter to reference the current item in the loop_array. Note if you feed a JSON array into loop_array then you can also reference specific columns with LoopValue['InsertColumnName'] but here we are using the simple Invoices array so we can just reference LoopValue for the current invoice number in the outer loop. And here we can see we are taking a LoopValue like INV002222 & we are looking for the 1st record where the uppercase FileName contains INV002222, INV 002222, INV_002222, or INV-002222.

Also note if we wanted to get the last record returned by the query instead of the 1st, then we could just reverse sort the lookup_from input by a target column so that the 1st records returned are actually the last records in the source data. Like reverse(sort(outputs('SampleData'), 'ID')).

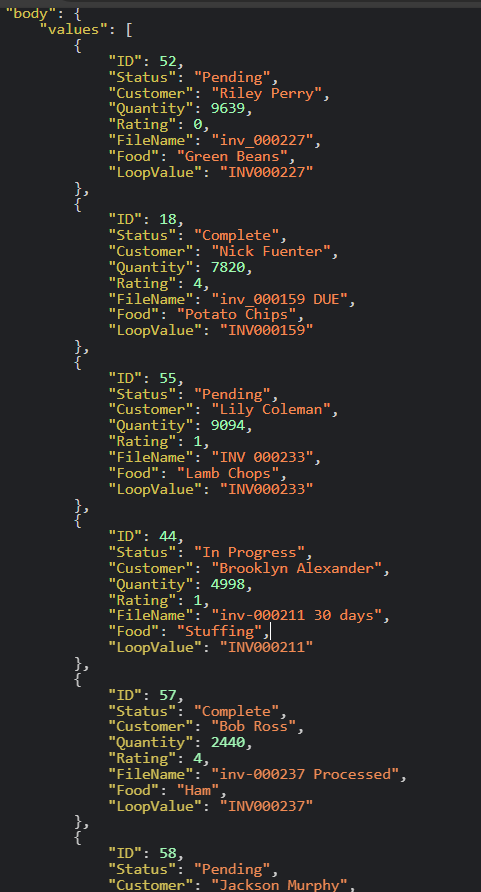

So if we run the For Each Lookup, we get this JSON array output of all the 1st records returned by the query for each invoice number.

Note we also get the invoice number used in each lookup query merged on to each record in the LoopValue field. If we want to we can easily reference that data or even use a Select action to rename that field something like "Invoice".

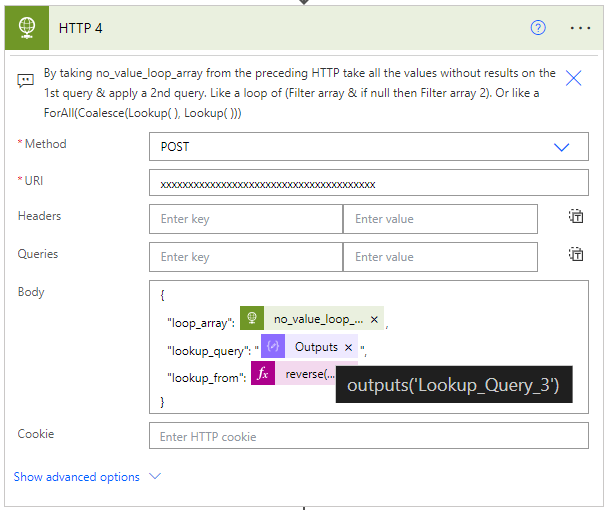

And note that because the action returns all loop_array items where a record was not found to no_value_loop_array, we can stack different queries for something like a ForAll(Coalesce(Lookup( ), Lookup( ))). This is very helpful if we want to check for a record under one query like LoopValue == item['FileName'].upper() but then if it doesn't find anything under that strict query then have it use a more flexible query like LoopValue in item['FileName'].upper() so you can prioritize returning strict matches if they exist, but then fall-back to more flexible matches if the strict match doesn't exist.

For Each Filter Example

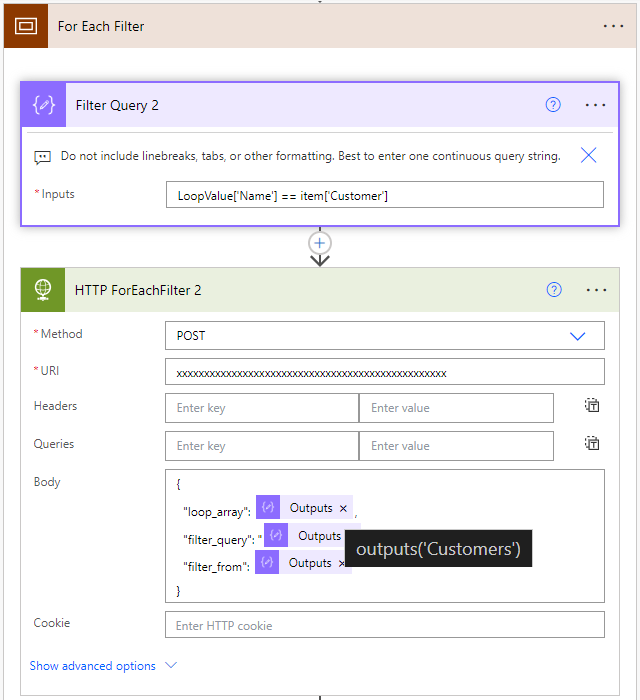

Here we see the ForEachFilter action takes a loop_array input for what array/JSON array to iterate over [like the main input to an Apply to each loop] (in this case we are using the Customers JSON array of contact info), a filter_query for our Python query, & a filter_from for the JSON array / table of data to filter in. The action will output an array of JSON arrays of all the records it found for the filter_query for each loop_array value. It will also return a no_value_loop_array of each loop_array item where the query did not return any records.

Note we now have a filter_query of...

where LoopValue['InsertColumnName'] is referencing the current item / JSON object in the Customers JSON array & referencing the Name column in that JSON object. So this, for each customer name, will return an array with a JSON array containing all that customer's order records. Then it also returns each customer contact record where the query did not return any records.

So if we run this For Each Filter we get an array of JSON arrays. Each JSON array within the array is all the orders for a given customer.

Note that we also get the customer contact record used for each query merged on each record in the LoopValue. So if we need to access the customer data or if we want to pull the data into top-level key-value pairs in a Select action, we can.

Then we also have an additional GroupBy Select action to further process the array of JSON arrays so we can get a single JSON array with each customer's name, order count, sum of quantities, & average rating. This will use some xpath( ) expressions, so if you want this GroupBy functionality then you may find this explanation video helpful (https://youtu.be/z5MxbwURV68?si=uiG13i8xVrz7Yswk). Technically, you could do some For Each Lookups & For Each Filters using just xpath( ) expressions in a non-premium Select action as long as you only need basic operators (no in/contains) & don't need query expressions like upper() or regex. I will likely put out different content on using xpath( ) for basic, non-premium For Each Lookups & For Each Filters later.

So at this GroupBy step our flow run output the following

Speed, Action Calls, & Cost Comparison Example

Let's do a comparison between the standard Filter array in Apply to each vs the Python For Each Lookup.

And we'll turn on a concurrency of 50 to make the standard set-up as fast as possible.

For the 4000 invoice numbers the For Each Lookup function took 4 seconds, 2 actions, & an Azure Function cost of (4 x 1000 x .00012GB x $.000016 = $.000008)

Whereas the Apply to each took 160 seconds, 8000 actions, & a Logic Apps cost of (8000 x $.000125 = $1).

(*We use Logic Apps cost because at higher frequencies or record volumes even a premium Power Automate user license with 40,000 action calls per day can't afford to run this workload & it must be run on Logic Apps.)

Now this is a smaller example so a $1 cost difference may not seem like much, but scale it to a larger dataset, like a 25,000 record loop of 50000 actions (50000 x $.000125 = $6.25) running once a day for an entire year, and you start to see significant savings ($6.25 x 365 = $2,281.25/year).

Import & Set-Up

Find & download the Solution import package at the bottom of this main post. Go to the Power Apps home page (https://make.powerapps.com/). Select Solutions on the left-side menu, select Import solution, Browse your files & select the PYFilterForEachLookupForEachFilter_1_0_0_x.zip

file you just downloaded. Then select Next & follow the menu prompts to apply or create the required connections for the solution flows. And finish importing the solution.

Once the solution is done importing, select the solution name in the list at the center of the screen. Once inside the solution click on the 3 vertical dots next to the flow name & select edit.

The flow should be open, so next we are going to set up the Azure Function for the HTTP actions in the flow.

We want to select to Create a new function app & go through the creation menus.

Make sure to choose Python for the Runtime stack.

Once you get to the Function App resource select Create function under Create in Azure portal, select HTTP trigger, & select Next. Then select Create.

Once in the Function code view you can go back to the flow tab, copy everything in the Azure Function Python Script action, select all the sample code in the editor, & paste the actual function code over the sample code in the editor. Then select to save the function.

Now we can go back to search for Function App again so we can navigate back a few menus to the list of all the Function Apps.

Find the new Function App name in the list of app names & select it. A menu should open up with links to the 3 different HTTP functions you can call from the flow. We need to go to each HTTP function & copy each unique Function URL into their respective HTTP action URIs in the flow.

Python Filter

For Each Lookup

For Each Filter

Now you're ready to test with the sample data or to build these into a workflow with some of your own data.

*Warning: May need to rename some columns if you reference them in the filter(s). I've found column names with underscores _ cause errors.

***If you want the fastest performance or if you are working with larger datasets like filtering through 10,000+ rows over 2000+ loop values, then you may want to use V1b in the Solution / flows. Filtering many times through large datasets may lead the Azure Function to time-out, V1b chunks/batches its filters into multiple concurrent requests.***