Want to get data from an Excel sheet that isn't formatted as a table? Don't want to use any premium connectors?

Use these Office Scripts to detect where the relevant data is & extract it to a JSON array using a specified top header row as field names / JSON keys. Also includes options to specify which columns / fields to return & ways to conditionally filter rows.

Examples

We can start with a simple example where the range / desired table is the only thing on Sheet1 and is flush against the top & left side of the sheet. Also for V1 the table of desired data is less than the max script output size limit of a few MB or about 300,000 cells (keep reading to get to V1b batching that overcomes the data limit).

For this we can set the Run script action to target our desired Example Excel workbook. Then we can specify "Sheet1" in the "sheetName" input. Then the rest of the inputs can be 0 or left empty.

Finally there is a Select action, primarily to show how to convert the integers returned from Excel into date-time format. For dates, Excel returns integers counting the days after 1899-12-30 so we have to use addDays to add the integer number to than starting date in Power Automate.

if(empty(item()?['InsertDateColumnName']), '', addDays('1899-12-30', int(item()?['InsertDateColumnName']), 'u'))

Here we can see the function returned the desired table data in a JSON array & the Select action converted the integers to date-time format.

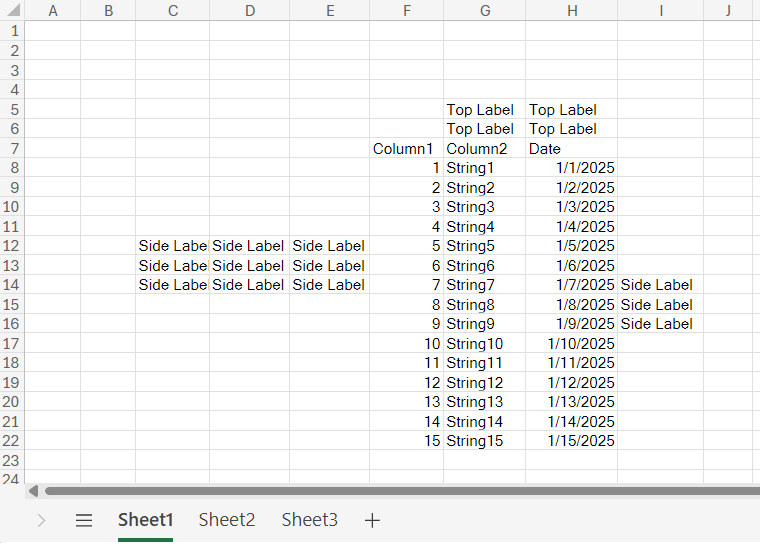

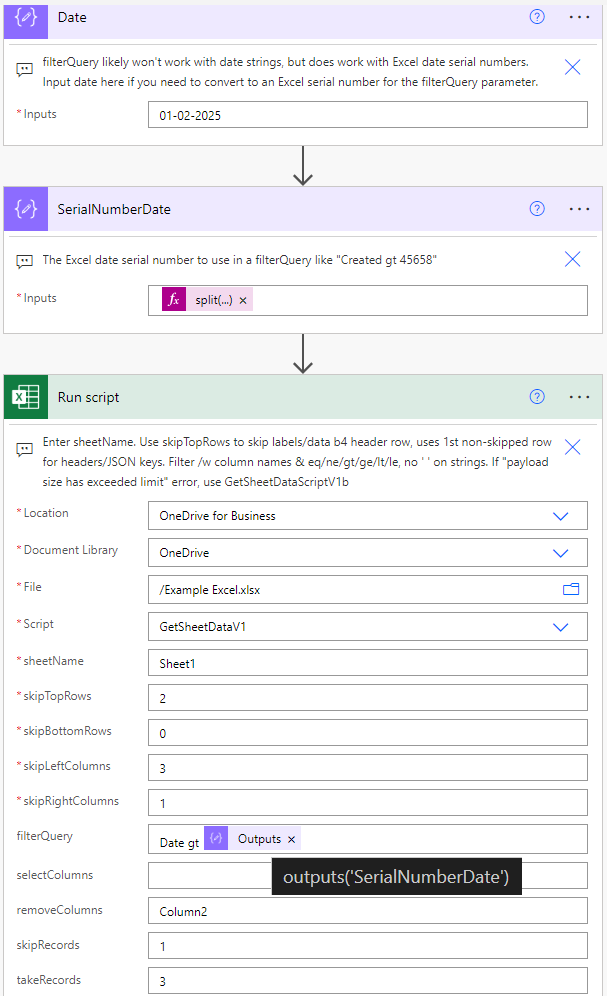

Next let's show a more complicated example with a range / desired table placed randomly on the sheet with some irrelevant data placed all around it.

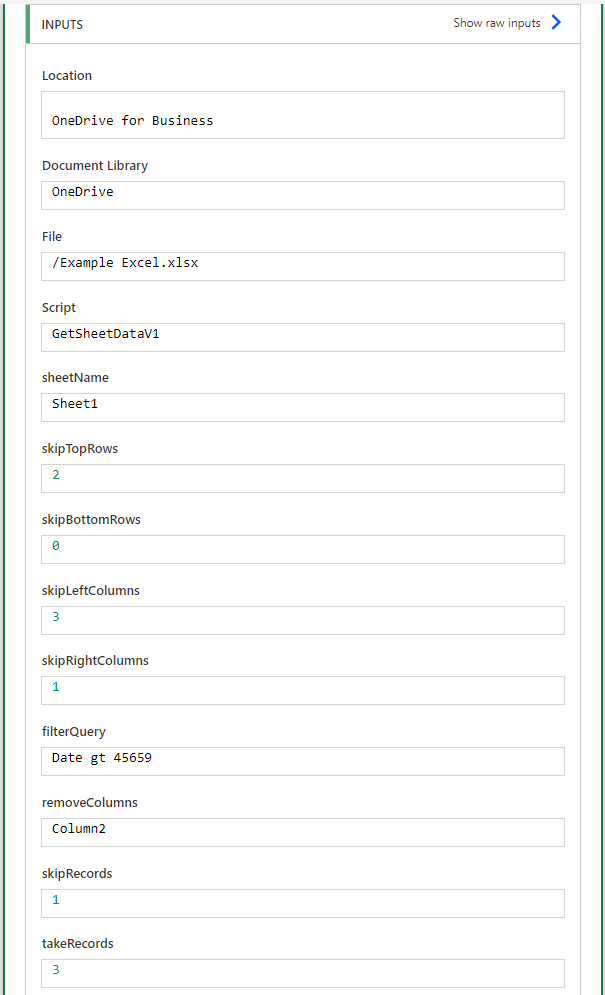

For this one we need to understand that the function will detect the minimum row & minimum column with data in it & start the range from those two indexes. So in this case it will start the range from column C, row 5 and go down to column I, row 22. The function takes the 1st range row & uses it for the column headers / field names / JSON keys. So we want to specify the function should ignore the first 2 rows of label data in the "skipTopRows" parameter. That way it will pick up the 3rd row with the Blank, Blank, Blank, Column1, Column2, Date, Blank as the header row. Then we note the Blank column headers over the columns with irrelevant data pose an issue. We can either go to the Excel sheet & give those columns headers in the headers row or we can specify for the function to skip those columns in the range with the "skipLeftColumns" & "skipRightColumns" parameters. There are 3 columns on the left to skip & 1 column on the right to skip so we can input 3 & 1 respectively for those parameters.

After the inputs are set to get the right range, we can then add our column selections, row filters, skip records, & top records. For this example we want to exclude Column2 so we put Column2 in the "removeColumns" parameter. Alternatively we could have entered "Column1,Date" in the "selectColumns" parameter to get the same result. Then for the rows "filterQuery" we can enter filters similar to odata filters. So things like "Column2 eq String3" (Note no single quotes ' ' around strings here) or "Date gt 45659" (Note dates likely need to be in Excel serial number format). Also note that like the standard Excel connector we can only enter a single filter query conditional, it can not handle "and" or "or" statements. Then in this example we also specify after the range is selected & after the filter query is applied we should skip the 1st record returned ("skipRecords" of 1) and we should take only the top 3 records after that skip ("takeRecords" of 3).

So all of that ultimately returns us these 3 records. The irrelevant data in the range around the table was excluded. Column2 was excluded. The Date gt 45659 (Date gt 01-02-2025) means the 1st 2 records were excluded, skip records of 1 means record 3 was skipped so we start at record 4, and top records of 3 mean we only got records 4,5, & 6, cutting off records 7 to 15.

Now if your desired table data is greater than a few MB, like greater than 300,000 cells, then you may get a "payload size has exceeded limit" error on this V1 flow. In this case you will want to use the V1b flow that can handle desired table data of any size (as long as the final return JSON is less than the Power Automate 100MB limit).

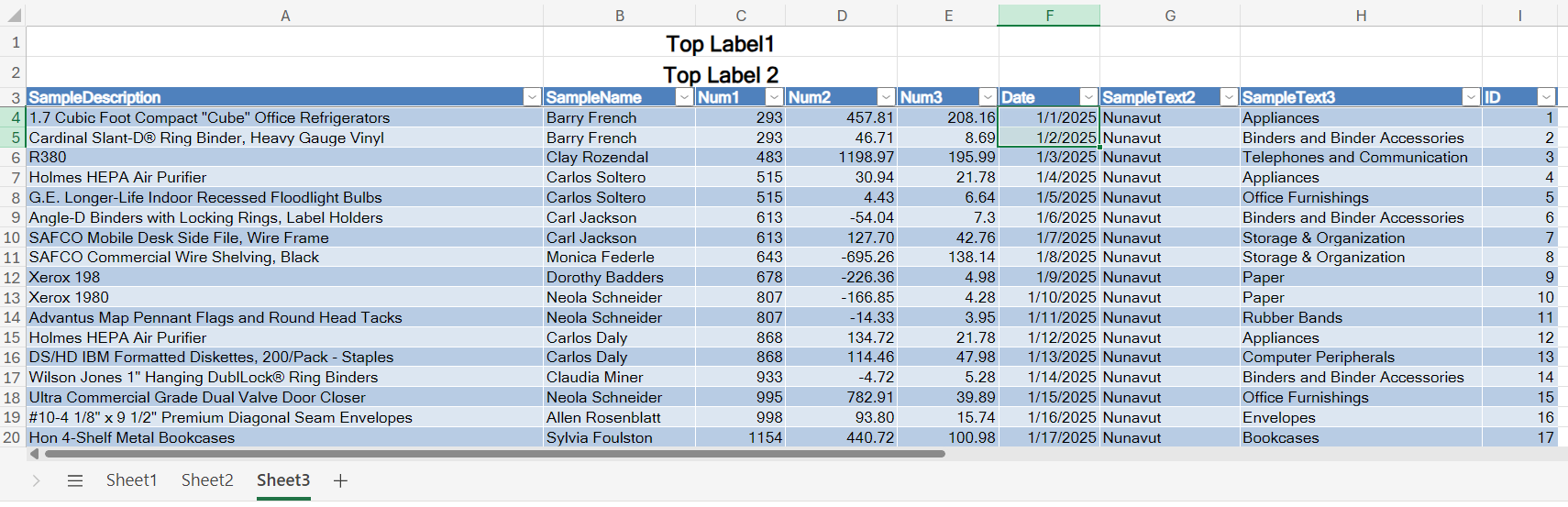

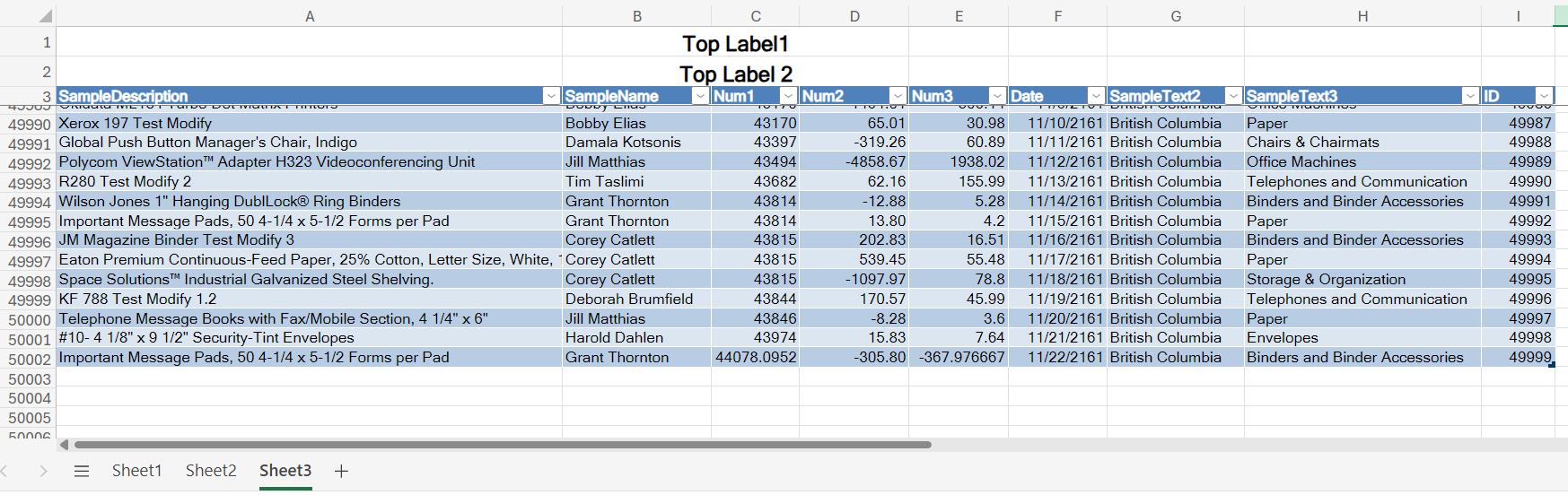

For V1b we can use an example Excel sheet with almost 50,000 rows of data...

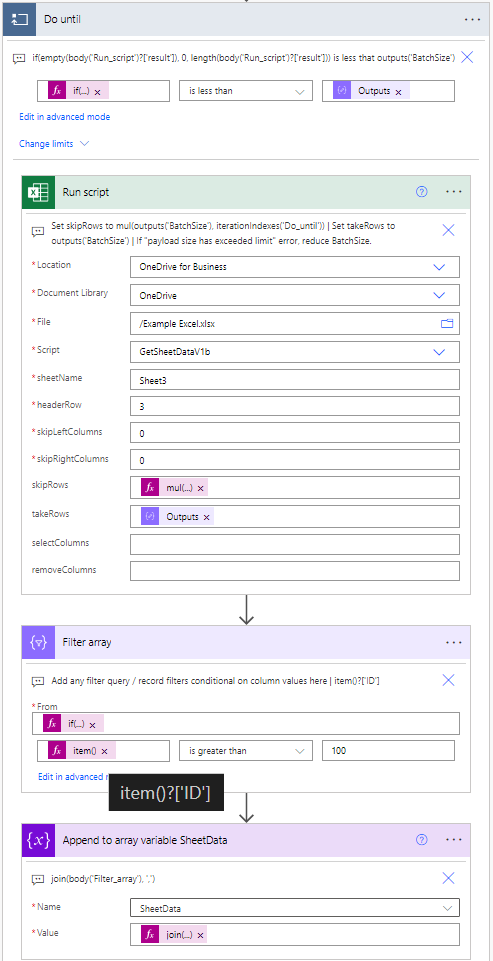

The main piece of the V1b flow is shown below. We essentially have a Run script action set to grab JSON array batches of data & insert them into a variable inside a Do until loop. In this case we target our Example Excel workbook, input our sheetName, input the row number of our headerRow, set the skipRows to skip Loop-Count * BatchSize number of rows & set the takeRows to take BatchSize number of rows (so each loop iteration takes the next BatchSize number of rows before eventually adding them to the variable).

Before adding data to the variable we have a follow-on Filter array action where we can create a condition to filter down our data (filter conditions can include references to column values with expressions like item()?['InsertColumnName']).

Then we add a stingified version of the JSON array to the SheetData variable.

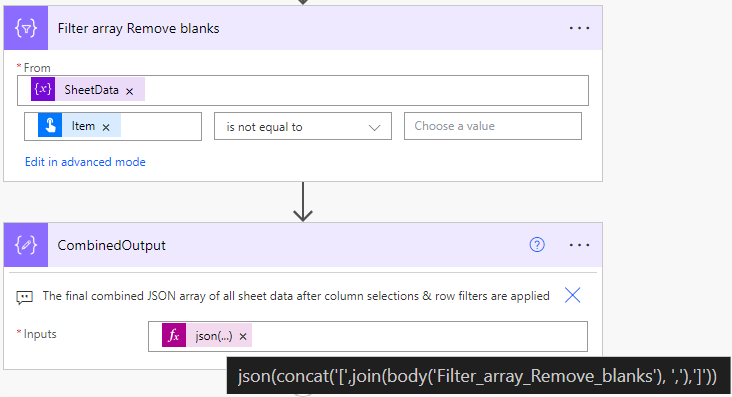

After the Do until loop we have another Filter array action to remove any blanks "" in the array of stringified JSON in case our earlier Filter array action returned no records for a given batch of data. Then in the CombinedOutput action we join all the stringified JSON back together into a single stringified JSON array, add the array brackets '[' ']' to it & convert it to a proper JSON format/type. So the CombinedOutput action will hold the JSON array of all the batches of data put together as if it came out of an Excel list rows present in table action.

So if we run this flow...

In less than a minute we get our output combined JSON array. Note our Filter array action set things to only return records where ID is greater than 100, so our data starts at ID = 101.

And the data ends at ID = 49999.

Import & Set-Up

Find & download the Solution import package at the bottom of this main post. Go to the Power Apps home page (https://make.powerapps.com/). Select Solutions on the left-side menu, select Import solution, Browse your files & select the GetExcelSheetData_1_0_0_xx.zip file you just downloaded. Then select Next & follow the menu prompts to apply or create the required connections for the solution flows. And finish importing the solution.

Once the solution is done importing, select the solution name in the list at the center of the screen. Once inside the solution click on the 3 vertical dots next to the flow name & select edit.

Now that the flow is imported & open, we need to set up the Office Script used to get the Excel sheet data. Copy the script code in the Office Script GetSheetData action.

Navigate to an Excel file on your OneDrive, go to the Automate tab, select New Script, & remove the placeholder script that opens in the Code Editor menu.

Paste the code from the Office Script GetSheetData action into the Code Editor & save the script under a new name. Save the script.

Back in the flow editor tab, delete the PlaceholderValue Delete after import action.

Now you can set up your main Run script action.

For V1 you'll need to set the file Location, file Document Library, File path, Script, sheetName, & other optional parameters.

For V1b you'll need to set the file Location, file Document Library, File path, Script, sheetName, headerRow, skipRows ( mul(outputs('BatchSize'), iterationIndexes('Do_until') ), takeRows ( outputs('BatchSize') ), & other optional parameters.